XAIface: Measuring and Improving Explainability for AI-based Face Recognition

Face recognition has become a key technology in our society, used in a wide range of applications while having an impact on privacy. As face recognition solutions based on machine learning become more popular, it is critical to fully understand and explain how these technologies work in order to make them more effective and more accepted by society.

In this project, we focus on face recognition technologies based on artificial intelligence and on the analysis of the factors that influence the final decision, as an essential step toward understanding and improving the underlying processes. The scientific approach pursued in the project is designed to be applicable to other use cases, such as object detection and pattern recognition tasks, in a wider set of applications.

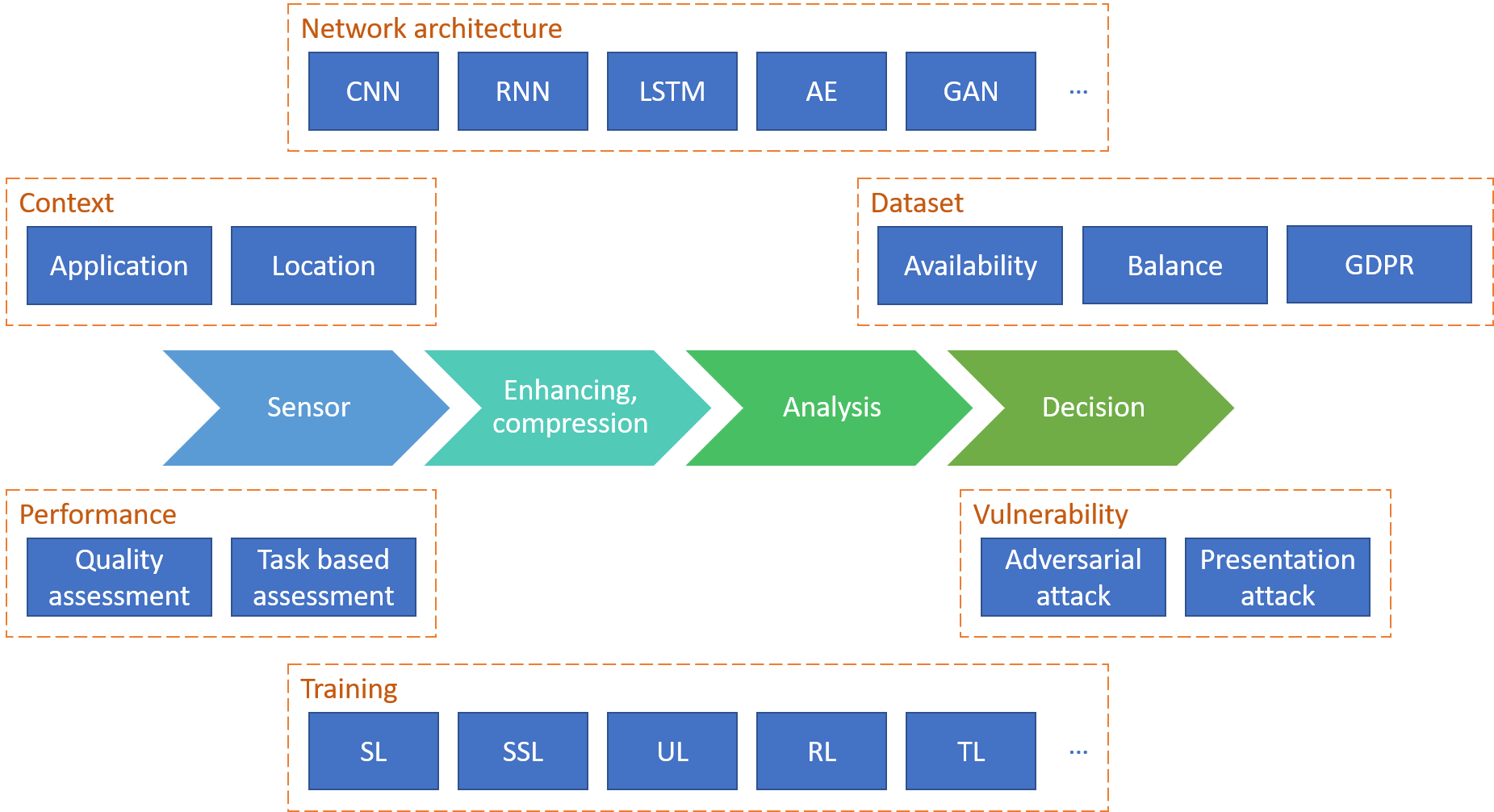

One of the original aspects of the proposed project is its scientific approach, which aims to explain how machine learning solutions achieve effective face recognition. It does so by identifying and analyzing the influencing factors that play an important role in face recognition performance across the end-to-end workflow, and their impact on the system’s decisions. Such performance depends largely on the acquisition, enhancement, compression, analysis and decision-making processes adopted in the workflow of a face recognition solution. Machine learning is currently used in many stages of such a workflow, and various factors, such as the dataset used in the training process, the training approach itself, the architecture of the machine learning model, and the types of attacks and interferences, are among the influencing factors that contribute to the understanding and explainability of the complete system.